
I am a second-year Ph.D. student in EECS at MIT CSAIL, advised by Professor Pulkit Agrawal. I received my Bachelor's degree (Summa Cum Laude) in Mechanical Engineering from Seoul National University. Previously, I was a full-time research scientist at NAVER LABS, where I developed machine learning and reinforcement learning algorithms that enable robot arms such as AMBIDEX to perform various daily tasks. I also spent some time at Saige Research as an undergraduate research intern.

Email / CV / Google Scholar / Twitter / Blog


[2025 June 15]🚀 I started a blog where I share thoughts and tutorials on robotics, AI, and research life. Check it out!


My dream is to see robots perform all sorts of complex, long-horizon, contact-rich manipulation tasks in the real world, just as we humans do every day. One scalable way to achieve this dream is to pretrain robots with (a) a repertoire of reusable low-level motor control skills and (b) an intelligence that can temporally or spatially compose those skills to complete unseen tasks. What kinds of low-level manipulation skills should we pretrain in advance, and how should we train them? Given such a repertoire of reusable skills, how should we train an intelligence that orchestrates them to perform longer-horizon tasks? My upcoming work will mainly address these questions!

* denotes equal contribution.


Younghyo Park, Haoshu Fang, Pulkit Agrawal course website (6.S186) / lecture videos

This course provides a practical introduction to training robots using data-driven methods. Key topics include data collection methods for robotics, policy training methods, and the use of simulated environments for robot learning. Throughout the course, students gain hands-on experience collecting robot data, training policies, and evaluating their performance.


Younghyo Park, Pulkit Agrawal github / App Store / twitter / short paper

An app for Apple Vision Pro that streams the user's head, wrist, and finger tracking results to any machine connected to the same network. It can be used to (a) teleoperate robots using human motions and (b) collect datasets of humans navigating and manipulating the real world!


Younghyo Park, Jagdeep Bhatia, Lars Ankile, Pulkit Agrawal ICRA, 2025 project page / twitter

DART is a teleoperation platform that leverages cloud-based simulation and augmented reality (AR) to revolutionize robotic data collection. It enables higher data collection throughput with less physical fatigue and facilitates robust policy transfer to real-world scenarios. All datasets are stored in the DexHub cloud database, providing an ever-growing resource for robot learning.


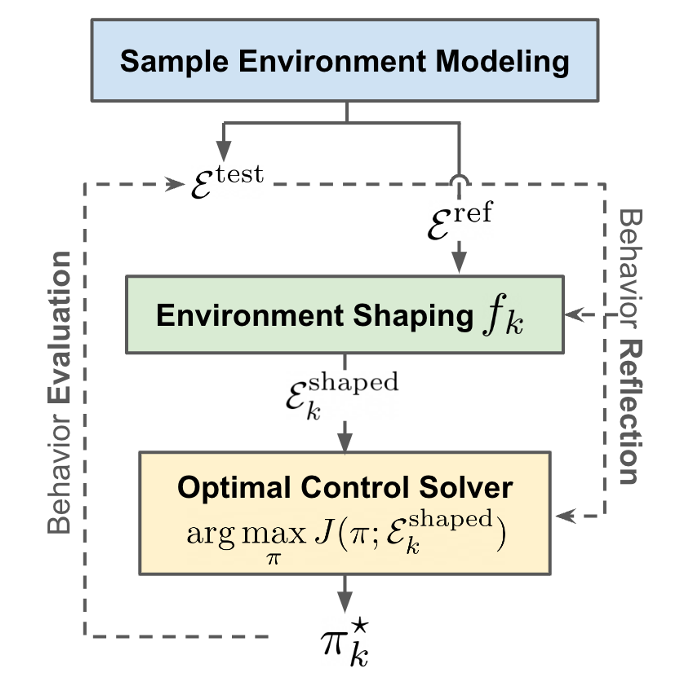

Younghyo Park*, Gabriel Margolis*, Pulkit Agrawal ICML, 2024 (Oral Presentation, Top 5%) project page / video / twitter

Most robotics practitioners spend more time shaping the environment (e.g., rewards, observation/action spaces, low-level controllers, simulation dynamics) than tuning RL algorithms to obtain a desirable controller. We posit that the community should focus more on (a) automating environment shaping procedures and/or (b) developing stronger RL algorithms that can tackle unshaped environments.


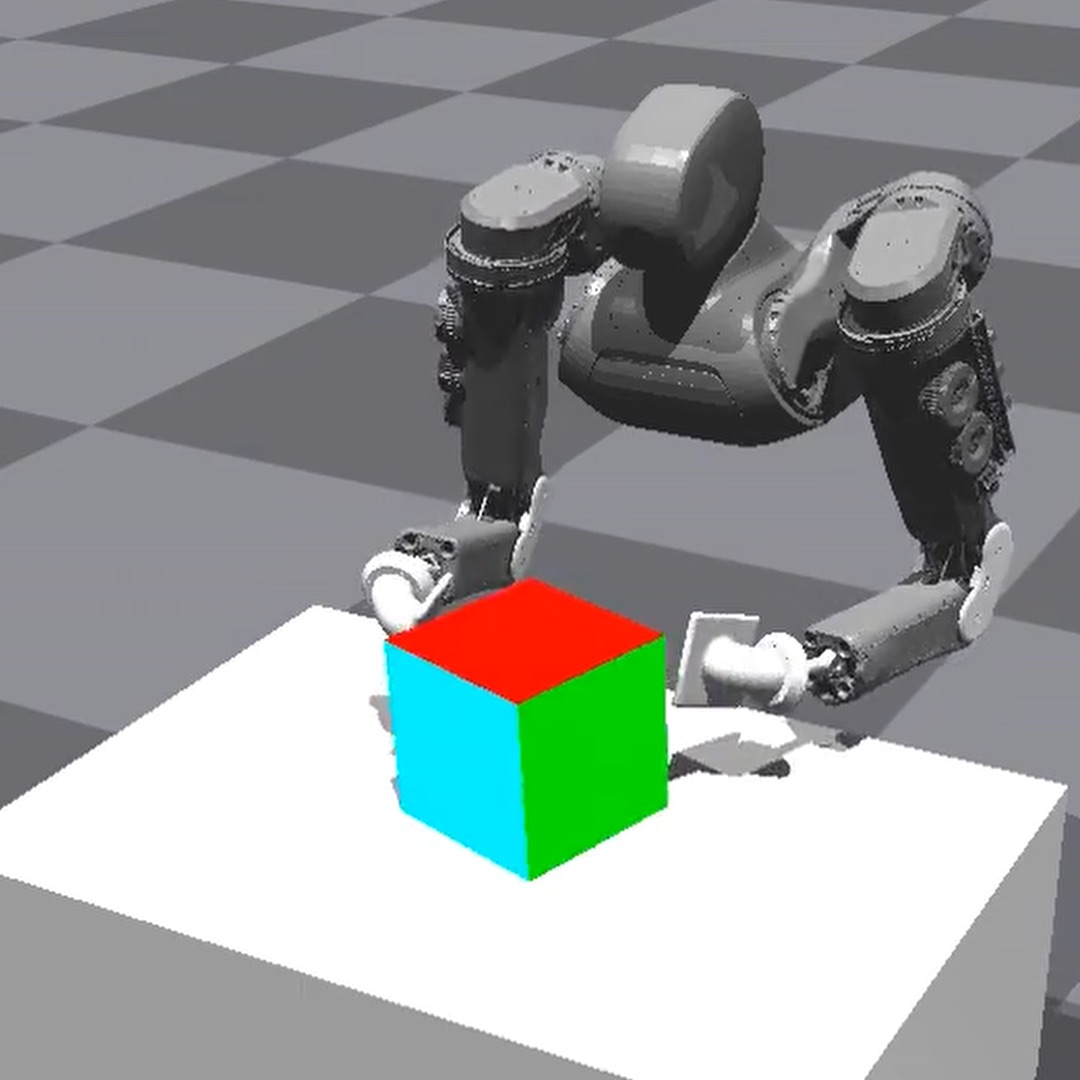

Sunin Kim*, Jaewoon Kwon*, Taeyoon Lee*, Younghyo Park*, Julien Perez ICRA, 2023 project page / video / twitter

An algorithm that discovers, from scratch, a diverse and useful set of skills that are inherently safe to compose for unseen downstream tasks. Considering safety during the skill discovery phase is a must when solving safety-critical downstream tasks.


Younghyo Park*, Seunghoon Jeon*, Taeyoon Lee IROS, 2022 (Winner: Best Entertainment & Amusement Paper Award) project page / video / story / interview / paper

The drawing robot ARTO-1 performs complex drawings in the real world by learning low-level stroke-drawing skills, which require delicate force control, from human demonstrations. This approach simplifies the planning required to actually produce an artistic drawing.


Kyumin Park, Younghyo Park, Sangwoong Yoon, Frank C. Park Transactions on Mechatronics, 2021 paper

We detect collisions for robot manipulators using unsupervised anomaly detection methods. Compared to supervised approaches, this approach does not require collision datasets and can even detect unseen collision types.


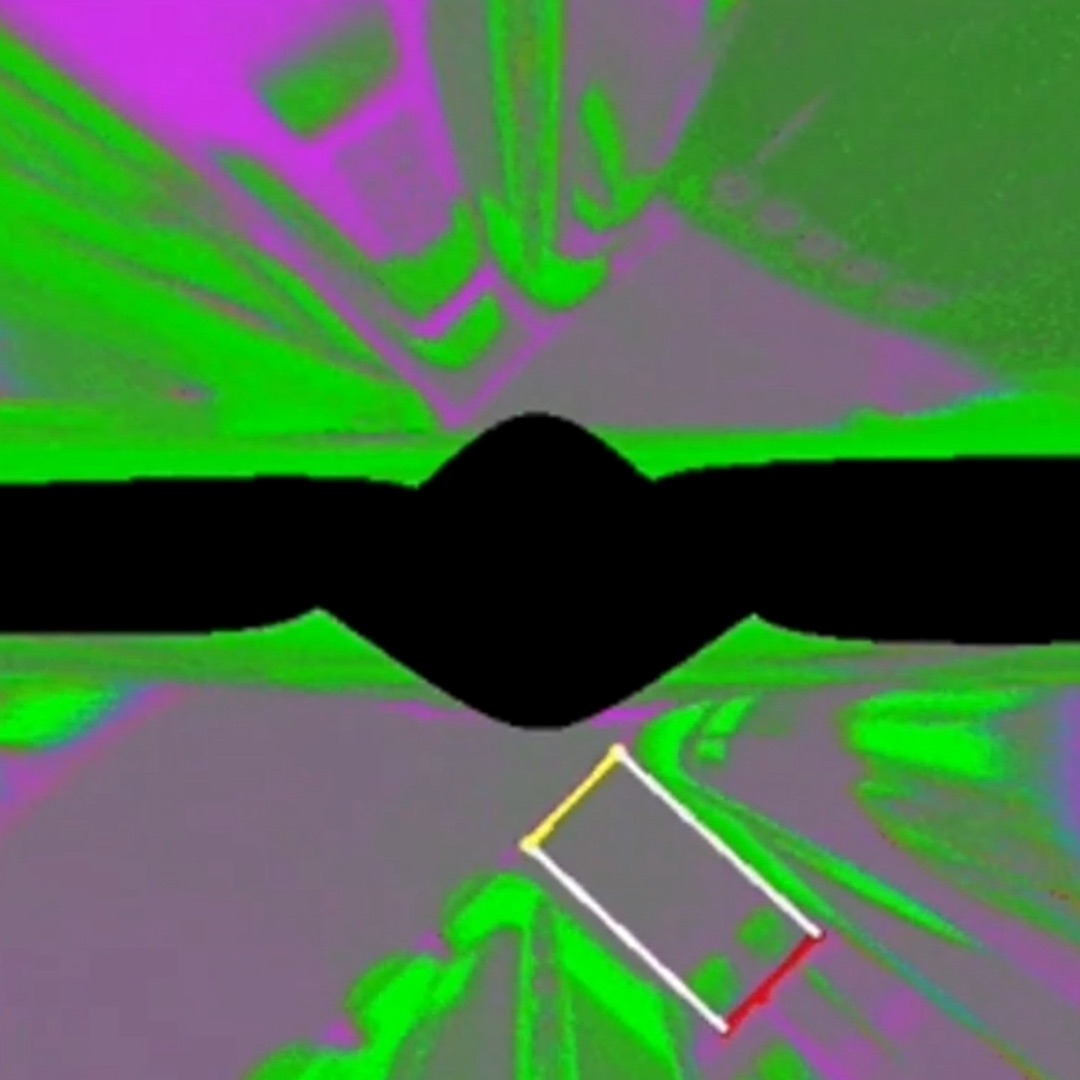

Younghyo Park, Joonwoo Ahn, Jaeheung Park ICRITA, 2022 paper

A method for real-time parking slot detection and tracking in autonomous parking systems.


Check out Jon Barron's repository for this website's template.